Home

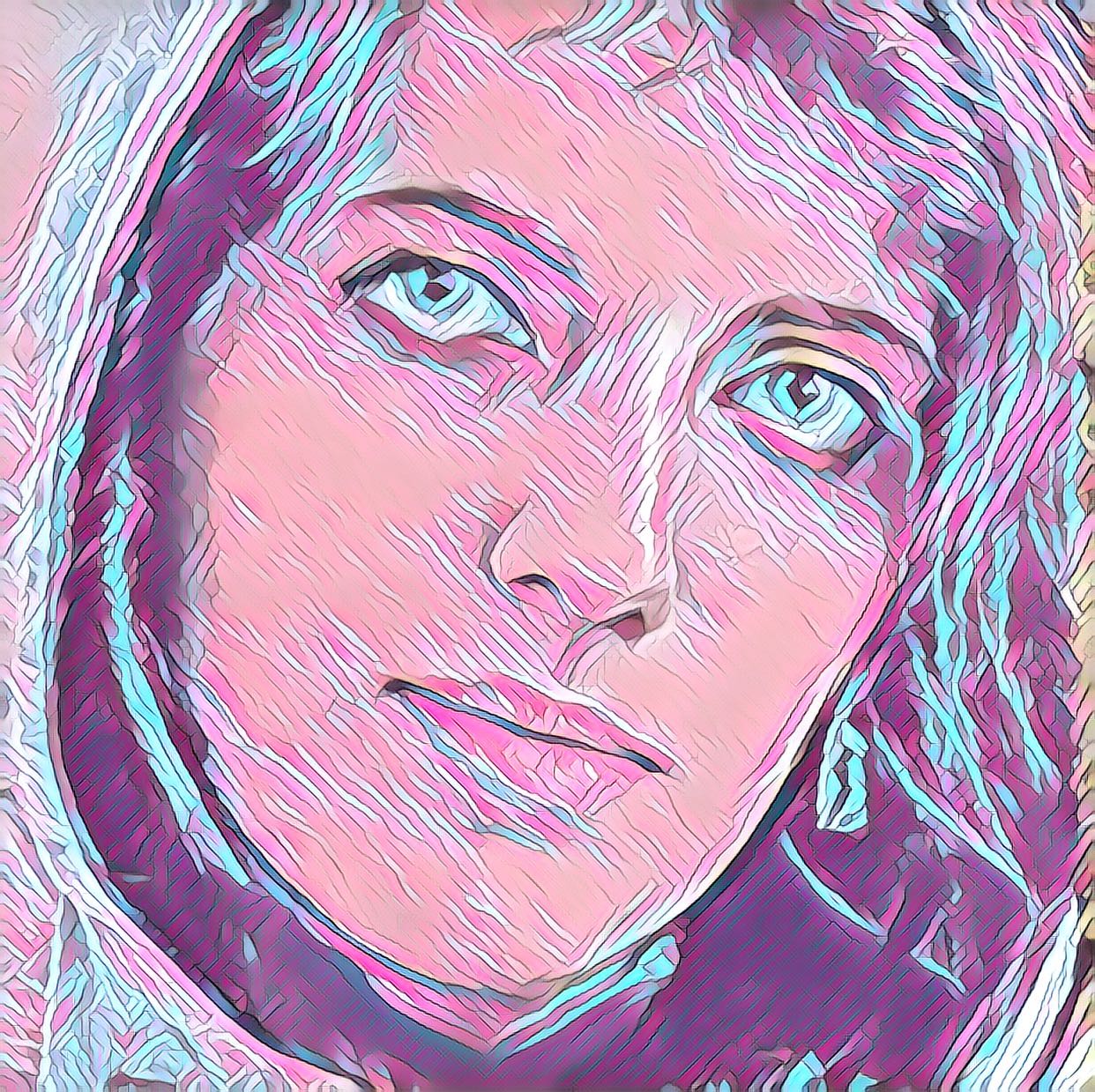

I am Zahra, a Ph.D. in Computer Science at Arizona State University. I work in the Yochan research group, directed by Prof. Subbarao Kambhampati. My general research interest is in developing computational models and frameworks for trust and for understanding human cognitive states, with the goal of enhancing human-AI interaction. I have extensive research experience in diverse areas such as automated planning, AI, human-AI interaction, human-robot interaction, reinforcement learning, machine learning, data-driven modeling of human behavior, statistical modeling, decision-making frameworks, and game theory. You can find the latest version of my CV here.

Publications

Experiences

In this role, I was responsible for developing a modeling framework to understand and predict human cognitive states, modeling the dynamics of human behavior in human-automation interactions, and creating and validating tools to optimize system performance based on predicted human states.